JUnit Tests Generation

This practice is a specialization of the Java Analysis, Visualization & Generation Practice for generation of JUnit tests. In particular:

- Generation of tests for methods or classes with low test coverage

- Leveraging Gen AI such as OpenAI ChatGPT or Azure OpenAI Service for test generation

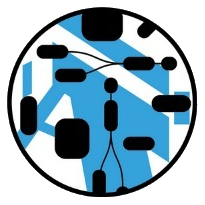

The above diagram shows Java development activities and artifacts. Black arrows show the typical process, blue arrows show the test generation loop.

The developer produces source artifacts, which may include non-Java artifacts used to generate Java code (e.g. Ecore models), “main” Java sources, and test Java sources. Java sources are compiled into bytecode (class files). It is important to note that matching bytecode classes and methods to source code classes and methods might be non-trivial because of:

- Lambdas

- Anonymous and method-scope classes

- Annotation processors like Lombok
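To illustrate the matching problem, here is a minimal sketch of mapping bytecode-level names back to source-level names. It assumes javac naming conventions: nested and anonymous classes get binary names such as `Outer$1`, and lambda bodies are compiled to synthetic methods named `lambda$<enclosingMethod>$<index>`. Other compilers may name things differently, and class names may legitimately contain `$`, so treat this as a heuristic. `BytecodeNames` and the `findOwner` method name are hypothetical.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper that maps JVM-level names back to source-level names. */
final class BytecodeNames {

    // Assumption: javac names lambda bodies lambda$<enclosingMethod>$<index>.
    private static final Pattern LAMBDA = Pattern.compile("lambda\\$(.+)\\$\\d+");

    private BytecodeNames() {}

    /** Returns the top-level class name for a binary name such as com.example.Outer$1. */
    static String topLevelClass(String binaryName) {
        // Look for '$' only in the simple name, after the last package separator.
        int dollar = binaryName.indexOf('$', binaryName.lastIndexOf('.') + 1);
        return dollar < 0 ? binaryName : binaryName.substring(0, dollar);
    }

    /** Returns the enclosing source method for a lambda body, or the name itself otherwise. */
    static String sourceMethod(String bytecodeMethodName) {
        Matcher matcher = LAMBDA.matcher(bytecodeMethodName);
        return matcher.matches() ? matcher.group(1) : bytecodeMethodName;
    }

    public static void main(String[] args) {
        System.out.println(topLevelClass("com.example.PetController$1"));
        System.out.println(sourceMethod("lambda$findOwner$0"));
    }
}
```

With a mapping like this, coverage recorded against an anonymous class or a lambda body can be attributed to the source class and method a developer would recognize.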

JUnit tests are compiled and executed. If a code coverage tool such as JaCoCo is configured, test execution produces coverage data. JaCoCo stores coverage data in a jacoco.exec file. This file is used to generate a coverage report and to upload coverage information to systems like SonarQube. In this practice it is also used to select the methods to generate tests for.

This diagram provides an insight into the test generation activity:

- Coverage data and bytecode are used as input to load the Coverage model.

- Source files, the coverage model, and bytecode (optional) are used to load the Java model of source code.

- The generator traverses the model and generates unit tests for methods with low coverage using a combination of programmatic (traditional) generation and Gen AI. Tests are also generated as a Java model and are then delivered to the developer for review, modification, and inclusion into the unit test suite.
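The selection step can be sketched as follows. This is a simplified illustration, not the coverage model used by the reference implementations: `MethodCoverage` and `LowCoverageSelector` are hypothetical names, and coverage is reduced to covered and missed line counts per method.

```java
import java.util.Comparator;
import java.util.List;

/** Hypothetical selector that picks the methods to generate tests for. */
final class LowCoverageSelector {

    private LowCoverageSelector() {}

    /** Returns methods whose line coverage is below the threshold, least covered first. */
    static List<MethodCoverage> select(List<MethodCoverage> methods, double threshold) {
        return methods.stream()
            .filter(method -> method.ratio() < threshold)
            .sorted(Comparator.comparingDouble(MethodCoverage::ratio))
            .toList();
    }

    public static void main(String[] args) {
        List<MethodCoverage> methods = List.of(
            new MethodCoverage("PetController", "initCreationForm", 9, 1),
            new MethodCoverage("PetController", "processUpdateForm", 0, 5));
        select(methods, 0.8).forEach(System.out::println);
    }
}

/** Hypothetical, simplified stand-in for one method entry of a coverage model. */
record MethodCoverage(String className, String methodName, int coveredLines, int missedLines) {

    /** Fraction of covered lines in the range 0..1; a method without lines counts as covered. */
    double ratio() {
        int total = coveredLines + missedLines;
        return total == 0 ? 1.0 : (double) coveredLines / total;
    }
}
```

The threshold is a design choice: a lower value focuses the generator on untested methods, a higher one also targets partially tested methods.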

The following section provides an overview of two “local loop” reference implementations (a.k.a. designs/embodiments) - all-in-one and componentized. There are many possible designs leveraging different alternatives at multiple variation points. The sections after the reference implementations section provide an overview of variation points, alternatives, and factors to take into consideration during alternative selection.

- Command line

- Reference Implementations

- Variation points and alternatives

Command line

The Nasdanika CLI features a JUnit command, which generates JUnit tests as explained above.

Reference Implementations

This section explains the reference implementations.

All-in-one

All-in-one generation is implemented as a JUnit test, available in TestGenerator. An example of tests generated by this generator is PetControllerTests.

As the name implies, all steps of source analysis and generation are implemented in a single class and are executed in one go.

Componentized

Componentized test generation, which is also executed in one go, is implemented in these classes:

- TestJavaAnalyzers - loads sources, coverage, and inspectors, passes the sources to the inspectors, aggregates and saves results.

- Coverage Inspector - generates tests for methods with low coverage, leveraging the TestGenerator capability provided by OpenAITestGenerator.

Repository scan/crawl

TestGitLab demonstrates how to scan a source repository (GitLab) using the REST API, inspect code, generate unit tests, commit them to the server (also over the REST API), and create a merge request. This implementation does not use coverage information; its purpose is to demonstrate operation over the REST API without having to clone a repository, which might be an expensive operation. The implementation uses the GitLab Model to communicate with the repository. It uses the Java model to load sources and StringBuilder to build test cases.

Variation points and alternatives

As you have seen above, you can have an AI-powered JUnit test generator in about 230 lines of code, and maybe that would be all you need. However, there are many variation points (design dimensions), alternatives at each point and, as such, many possible combinations thereof (designs). This section provides a high-level overview of variation points and alternatives. How to assemble a solution from those alternatives is specific to your context: there might be different solutions for different contexts and multiple solutions complementing each other. As you proceed with assembling a solution, or a portfolio of solutions, you may identify more variation points and alternatives. To manage the complexity you may use:

- Enterprise Model for general guidance,

- Capability framework or Capability model to create a catalog of variation points and alternatives and compute solutions (designs) from them

- Decision Analysis to select from the computed list of designs

- Flow to map your development process AS-IS and then augment it with test generation activities at different points.

In this section we’ll use the below diagram and the concept of an Enterprise with Stakeholders performing activities and exchanging Messages over Channels.

The mission of our enterprise is to deliver quality Java code. The loss function to minimize is loss = cost * risk / business value. For our purposes we’ll define risk as the fraction of lines not covered by tests, so that it decreases as test coverage grows: risk = missed lines / total lines. That’s all we can measure in this simple model. The cost includes resource costs such as salaries and usage fees for OpenAI.
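The following sketch works through the loss function with made-up numbers; all figures and the `LossFunction` class are illustrative assumptions. With a cost of 10,000, a business value of 50,000, and 400 of 1,000 lines missed, the loss is 10,000 * 0.4 / 50,000 = 0.08. If generated tests bring the missed lines down to 100 while adding 500 in GenAI fees, the loss becomes 10,500 * 0.1 / 50,000 = 0.021.

```java
import java.util.Locale;

/** Hypothetical worked example of loss = cost * risk / business value. */
final class LossFunction {

    private LossFunction() {}

    /** Risk as the fraction of lines not covered by tests. */
    static double risk(int missedLines, int totalLines) {
        if (totalLines <= 0) {
            throw new IllegalArgumentException("totalLines must be positive");
        }
        return (double) missedLines / totalLines;
    }

    /** Loss to minimize; cost and business value use the same (arbitrary) currency unit. */
    static double loss(double cost, double risk, double businessValue) {
        if (businessValue <= 0) {
            throw new IllegalArgumentException("businessValue must be positive");
        }
        return cost * risk / businessValue;
    }

    public static void main(String[] args) {
        // Illustrative numbers only.
        double before = loss(10_000, risk(400, 1_000), 50_000);
        double after = loss(10_500, risk(100, 1_000), 50_000);
        System.out.println(String.format(Locale.ROOT, "before=%.3f after=%.3f", before, after));
    }
}
```

In this example the extra GenAI cost is more than offset by the reduced risk, so the loss goes down; with different numbers the outcome may be the opposite.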

Below is a summary of our enterprise:

- Stakeholders & Activities:

    - Developer - writes code

    - Build machine - compiles code and executes tests

    - Test generator - generates unit tests

    - GenAI - leveraged by the test generator

- Messages:

    - Source code

    - Bytecode

    - Coverage results

    - Prompt to generate a test

    - Generated tests

- Channels:

    - Developer -> Build Machine : Source code

    - Developer -> Test Generator : Source code

    - Build Machine -> Test Generator : Coverage results, possibly with bytecode

    - Test Generator -> Developer : Generated tests

    - Test Generator -> GenAI : Prompt

The sections below outline variation points and alternatives for the list items above.

Stakeholders & Activities

Developer

A developer writes code - both “business” and test. They use some kind of an editor, likely an IDE - Eclipse, IntelliJ, VS Code. Different IDEs come with different sets of plug-ins, including AI assistants. Forcing a developer to switch from their IDE of preference to another IDE is likely to cause a considerable productivity drop, at least for some period of time, even if the new IDE is considered superior to the old one. So, if you want to switch to another IDE just because it has some plug-in which you like - think twice.

Build machine

A build machine compiles code and executes tests. Technically, compilation and test execution may be separated into two individual activities. We are not doing that in this analysis because it has little relevance to test generation. You can do it in yours.

Test generator

The test generator generates tests by “looking” at the source code, bytecode, and code coverage results.

Because the source code is a model element representing a piece of code (method, constructor, …), the generator may traverse the model to “understand” the context. E.g. it may take a look at the method’s class and other classes in the module. If the sources are loaded from a version control system, it may take a look at the commits. And if the source model is part of an organization model, it may look at “sibling” modules and other resources.

By analyzing source and bytecode, the generator would know which methods a given method calls, which objects it creates, and which methods call it. It would also “know” branch conditions, e.g. switch cases. Using this information the generator may:

- Generate comments to help the developer

- Generate mocks, including constructor and static methods mocks

- Generate tests for different branches

- Build a variety of prompts for GenAI

The test generator may do the following with code generated by GenAI:

- Add it to generated test methods commented out, as is done in the reference implementations

- “Massage” - remove backticks, parse, add imports - generated and implied.
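The two post-processing steps above can be sketched as follows. This is a minimal illustration, not the reference implementation: `GeneratedCodeMassager` is a hypothetical name, and the sketch assumes the whole GenAI response is a single Markdown-fenced block. Parsing and import handling are left out.

```java
import java.util.stream.Collectors;

/** Hypothetical post-processor for code returned by GenAI. */
final class GeneratedCodeMassager {

    // A Markdown code fence: three backticks.
    private static final String FENCE = "`".repeat(3);

    private GeneratedCodeMassager() {}

    /** Removes a leading fence line (with optional language tag) and a trailing fence. */
    static String stripFences(String response) {
        String text = response.strip();
        if (text.startsWith(FENCE)) {
            int firstNewline = text.indexOf('\n');
            text = firstNewline < 0 ? "" : text.substring(firstNewline + 1);
        }
        if (text.endsWith(FENCE)) {
            text = text.substring(0, text.length() - FENCE.length());
        }
        return text.strip();
    }

    /** Prefixes every line with a line comment so the code compiles but stays inactive. */
    static String commentOut(String code) {
        return code.lines()
            .map(line -> "// " + line)
            .collect(Collectors.joining(System.lineSeparator()));
    }

    public static void main(String[] args) {
        String response = FENCE + "java\nassertEquals(1, 1);\n" + FENCE;
        System.out.println(commentOut(stripFences(response)));
    }
}
```

Commenting the code out keeps the generated test class compilable even when the GenAI output is not, which leaves the decision to enable it with the developer.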

In addition to code generation the generator may ask GenAI to explain code and generate recommendations - it will help the developer to understand the source method and possibly improve it along the way. It may also generate dependency graphs and sequence diagrams.

GenAI

There are many GenAI models out there - cloud, self-hosted. Which one to use heavily depends on the context. For example, if you have a large codebase with a considerable amount of technical debt, having an on-prem model may be a good choice because:

- You may fine-tune it.

- Even if you don’t have tons of GPU power and your model is relatively slow, you can crawl your code base, generate tests, and deliver them to developers for review and inclusion into test suites.

In this scenario your cost is on-prem infrastructure and power. Your savings are not having to pay for GenAI in the cloud and, if your fine-tuned model turns out to be more efficient than a “vanilla” LLM, higher developer productivity.

There are many other considerations, of course!

Messages

In this section we’ll take a look just at bytecode and coverage results delivered to the test generator. The generator operates on models. As such, bytecode and coverage results can be delivered in a “raw” format to be loaded to a model by the generator, or pre-loaded to a model and saved to a file. The second option results in fewer files to pass to the test generator. The model file can be in XMI format or in compressed binary. The XMI format is human-readable, the binary format takes less space on disk.

Channels

Developer -> Build Machine/Test Generator : Source code

For local development the build machine is the same machine where the developer creates sources. The test generator is also executed on the developer’s workstation. As such, the delivery channel is the file system.

In the case of a CI/CD pipeline or build server such as Jenkins or GitHub Actions, a version control system is the delivery channel.

Build Machine -> Test Generator : Coverage results, possibly with bytecode

The test generator needs coverage results. If the coverage results are delivered in the raw form, it also needs bytecode (class files) to make sense of the results.

Coverage results can be delivered to the test generator using the following channels:

- Filesystem

- Jenkins workspace made available to the test generator over HTTP(S)

- Binary repository. For example, coverage results might be published to a Maven repository as an assembly along with sources, jar file, and javadoc. They can be published in a raw format or as a model. In this modality the test generator can get everything it needs from a Maven repository. You can use the Maven model or the Maven Artifact Resolver API to work with Maven repositories. See also Apache Maven Artifact Resolver. An additional value of storing coverage data in a binary repository is that it can serve as evidence of code quality stored with the compiled code, not in some other system.

- Source repository. Traditionally storing derived artifacts in a source repository is frowned upon. However, storage is cheap, GitHub Pages use this approach - so, whatever floats your boat!

- SonarQube - it doesn’t store method level coverage, so the solution would have to operate on the class level and generate test methods for all methods in a class with low coverage.

- You may have a specialized application/model repository/database and store coverage information there, possibly aligned to your organization structure.

Test Generator -> Developer : Generated tests

The goal is to deliver generated tests to the developer, make the developer aware that they are available, and possibly track progress of incorporating the generated tests into the test suite. With this in mind, there are the following alternatives/options:

- Filesystem - for the local loop

- Source control system - commit and create a merge/pull request. When using this channel, you can check whether there is already a generated test and whether it needs to be re-generated. If, say, the source method hasn’t changed (the same SHA digest) and the generator version and configuration haven’t changed, do not re-generate: it will only consume resources and create “noise”, because the LLM may return a different response and developers will have to spend time understanding what has changed. You may fork a repository instead of creating a branch. This way all work on tests will be done in the forked repository and the source repository will receive pull requests with fully functional tests. Tests can be generated to a separate directory and then copied to the source directory, or they can be generated directly to the source directory. Tests may be generated with the `@Disabled` annotation so they are clearly visible in the test execution tree, and with the `@Generated` annotation to track changes and merge generated and hand-crafted code.

- Issue tracking system - either attach generated tests to issues, or create a merge request and reference it from the generated issues. In systems like Jira you may create a hierarchy of issues (epic/story), assign components, labels, fix versions, assignees, etc. You may assign different generated tests to different developers based on the size and complexity of the source method. E.g. tests for short methods with low complexity can be assigned to junior developers. This alone may give your team a productivity boost!

- E-mail or other messaging system.
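The re-generation check described for the source control channel can be sketched with a fingerprint over the source method, generator version, and configuration. This is one possible approach under stated assumptions: `RegenerationCheck` is a hypothetical name, SHA-256 is an assumed choice of digest, and where the previous fingerprint is recorded (for example, next to the generated test) is left open.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.HexFormat;

/** Hypothetical check that decides whether a generated test is stale. */
final class RegenerationCheck {

    private RegenerationCheck() {}

    /** SHA-256 over the method source, generator version, and configuration, as hex. */
    static String fingerprint(String methodSource, String generatorVersion, String configuration) {
        try {
            MessageDigest digest = MessageDigest.getInstance("SHA-256");
            for (String part : new String[] {methodSource, generatorVersion, configuration}) {
                byte[] bytes = part.getBytes(StandardCharsets.UTF_8);
                // Length prefix keeps part boundaries unambiguous ("ab"+"c" differs from "a"+"bc").
                digest.update(ByteBuffer.allocate(Integer.BYTES).putInt(bytes.length).array());
                digest.update(bytes);
            }
            return HexFormat.of().formatHex(digest.digest());
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is not available", e);
        }
    }

    /** Re-generate only when nothing was recorded or any of the inputs changed. */
    static boolean needsRegeneration(String recordedFingerprint, String currentFingerprint) {
        return recordedFingerprint == null || !recordedFingerprint.equals(currentFingerprint);
    }

    public static void main(String[] args) {
        String recorded = fingerprint("int add(int a, int b) { return a + b; }", "1.0", "default");
        String current = fingerprint("int add(int a, int b) { return a + b; }", "1.1", "default");
        System.out.println(needsRegeneration(recorded, current));
    }
}
```

`HexFormat` requires Java 17 or later; on older versions the digest bytes can be formatted by other means.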

Issue trackers and messaging systems may be used to deliver generated documentation while source control will deliver generated tests. Developers will use the generated documentation such as graphs, sequence diagrams and GenAI explanations/recommendations in conjunction with the generated test code.

This channel may implement some sort of backpressure by saying “it is enough for now”, as a human developer would by crying “Enough is enough, I have other stories to work on in this sprint!”. Generating just enough tests is beneficial in the following ways:

- Does not overwhelm developers

- Does not result in stale generated code waiting to be looked at

- Does not waste resources and time to generate code which nobody would look at in the near future

- Uses the latest (and hopefully the greatest) generator version
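One way to sketch such backpressure is a bounded backlog of tests awaiting review: the generator asks how much room is left before generating anything. `ReviewBacklog` is a hypothetical name, and the capacity (for example, per developer or per sprint) is an assumption to be tuned for your context.

```java
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

/** Hypothetical bounded backlog of generated tests awaiting developer review. */
final class ReviewBacklog {

    private final BlockingQueue<String> pending;

    ReviewBacklog(int capacity) {
        this.pending = new ArrayBlockingQueue<>(capacity);
    }

    /** How many more tests the generator should produce right now. */
    int demand() {
        return pending.remainingCapacity();
    }

    /** Offers tests in order and stops at the first refusal; returns how many were accepted. */
    int submit(List<String> generatedTests) {
        int accepted = 0;
        for (String test : generatedTests) {
            if (!pending.offer(test)) {
                break; // "It is enough for now"
            }
            accepted++;
        }
        return accepted;
    }

    /** Called when a developer picks up a test for review, which frees capacity. */
    String reviewNext() {
        return pending.poll();
    }

    public static void main(String[] args) {
        ReviewBacklog backlog = new ReviewBacklog(2);
        System.out.println(backlog.submit(List.of("testA", "testB", "testC")));
        System.out.println(backlog.demand());
    }
}
```

Checking `demand()` before calling GenAI avoids paying for tests that would be refused anyway.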

With backpressure a question of prioritization/sorting arises - what to work on first? Source methods can be sorted according to:

- Size/complexity

- Dependency. E.g. method b (caller) calls method a (callee)

One strategy might be to work on callee methods first (method a) to provide a solid foundation. Another is to work on caller methods first because callee methods might be tested along the way.

These strategies might be combined - some developers (say, junior) may work on callee tests, while senior developers may be assigned to test complex caller (top-level) methods. Also, the top-down approach (callers first) might be better for addressing technical debt accrued over time, while the bottom-up approach (callees first) might be better for new development.
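Both orderings can be derived from a call graph. The sketch below uses a depth-first post-order traversal to list callees before their callers and reverses it for the top-down strategy. `CallOrder` is a hypothetical name, and the call graph is assumed to be available as a simple map from caller to callees, e.g. extracted from the source or bytecode model.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

/** Hypothetical ordering of source methods by call dependency. */
final class CallOrder {

    private CallOrder() {}

    /** Bottom-up: every callee appears before its callers (post-order depth-first traversal). */
    static List<String> calleesFirst(Map<String, List<String>> callGraph) {
        List<String> order = new ArrayList<>();
        Set<String> visited = new HashSet<>();
        // Sorted keys make the result deterministic regardless of the map implementation.
        for (String method : new TreeSet<>(callGraph.keySet())) {
            visit(method, callGraph, visited, order);
        }
        return order;
    }

    /** Top-down: the reverse of the bottom-up order. */
    static List<String> callersFirst(Map<String, List<String>> callGraph) {
        List<String> order = calleesFirst(callGraph);
        Collections.reverse(order);
        return order;
    }

    private static void visit(String method, Map<String, List<String>> callGraph,
                              Set<String> visited, List<String> order) {
        if (!visited.add(method)) {
            return; // Already handled; this also stops recursion on call cycles.
        }
        for (String callee : callGraph.getOrDefault(method, List.of())) {
            visit(callee, callGraph, visited, order);
        }
        order.add(method);
    }

    public static void main(String[] args) {
        // Method b calls method a, as in the example above.
        Map<String, List<String>> callGraph = Map.of("b", List.of("a"));
        System.out.println(calleesFirst(callGraph));
        System.out.println(callersFirst(callGraph));
    }
}
```

For mutually recursive methods no strict ordering exists; the traversal simply places them in visiting order.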

Test Generator -> GenAI : Prompt

GenAI is neither free nor blazing fast. As such, this channel may implement:

- Billing

- Rate limiting (throttling)

- Budgeting - so many calls per time period

- Caching
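Caching and budgeting can be sketched as a thin wrapper around whatever GenAI client is in use. Everything here is an assumption for illustration: the client is modeled as a plain `Function<String, String>` from prompt to response, `BudgetedGenAiChannel` is a hypothetical name, the budget is a simple call count without a time-based reset, and the class is not thread-safe.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical channel wrapper that adds caching and a call budget to a GenAI client. */
final class BudgetedGenAiChannel {

    private final Function<String, String> backend; // Stand-in for a real GenAI client.
    private final Map<String, String> cache = new HashMap<>();
    private int remainingCalls;

    BudgetedGenAiChannel(Function<String, String> backend, int callBudget) {
        this.backend = backend;
        this.remainingCalls = callBudget;
    }

    /** Returns a cached response if there is one; otherwise spends one call from the budget. */
    String complete(String prompt) {
        String cached = cache.get(prompt);
        if (cached != null) {
            return cached; // Cache hits are free and do not count against the budget.
        }
        if (remainingCalls <= 0) {
            throw new IllegalStateException("GenAI call budget exhausted");
        }
        remainingCalls--;
        String response = backend.apply(prompt);
        cache.put(prompt, response);
        return response;
    }

    int remainingCalls() {
        return remainingCalls;
    }

    public static void main(String[] args) {
        BudgetedGenAiChannel channel =
            new BudgetedGenAiChannel(prompt -> "// generated for: " + prompt, 10);
        System.out.println(channel.complete("Generate a JUnit test for method a"));
        System.out.println(channel.remainingCalls());
    }
}
```

Rate limiting and billing could be layered in the same way, for example by delaying calls or recording per-call cost in `complete`.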

Nasdanika
